AI in genomics

AI has been applied to various steps in clinical genomics, including variant calling, genome annotation, variant classification, phenotype-to-genotype mapping, and genotype-to-phenotype prediction. AI algorithms improve the accuracy of variant calling by learning from sequencing data and detecting complex dependencies. They enhance genome annotation and variant classification by integrating prior knowledge and predicting functional effects of genetic variants. AI algorithms can map phenotypes to genotypes using facial image analysis, EHR data analysis, and other techniques, leading to accurate prioritization of candidate pathogenic variants. Additionally, AI algorithms can predict disease risk by integrating genetic and non-genetic risk factors, improving risk stratification models for common complex diseases.

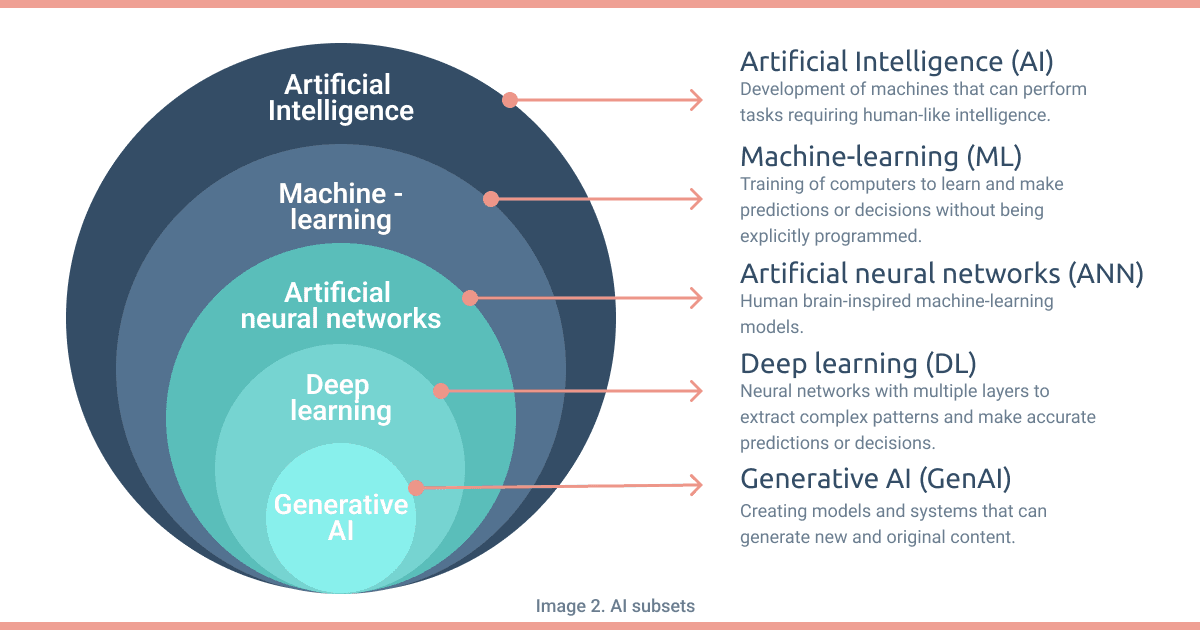

Clinical genomics relies especially on deep learning to analyze large and complex datasets, such as nucleotide sequences, and to perform tasks like variant calling and annotation, variant impact prediction, and phenotype-to-genotype mapping [4].

Another application of AI borrows techniques from computer vision. Just as these methods detect pixel patterns in images, they can be used to identify recurrent motifs in DNA sequences [4].
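The analogy with image analysis can be made concrete with a small sketch. The snippet below is only an illustration, not the method described in [4]: it one-hot encodes a DNA string and slides a filter over it, which is the 1D counterpart of a convolution over pixels. The function names, the example sequence, and the hand-set "TATA" filter are all assumptions made for this example; in a trained convolutional network the filter weights would be learned from data.

```python
BASES = "ACGT"

def one_hot(seq):
    """Encode a DNA string as a list of 4-element vectors (A, C, G, T)."""
    return [[1.0 if b == base else 0.0 for base in BASES] for b in seq]

def conv1d_scan(seq, kernel):
    """Slide a (k x 4) filter over the one-hot sequence; return one score per position."""
    x = one_hot(seq)
    k = len(kernel)
    return [
        sum(x[i + j][c] * kernel[j][c] for j in range(k) for c in range(4))
        for i in range(len(x) - k + 1)
    ]

# Hypothetical filter set by hand to match the motif "TATA" (assumption for illustration).
kernel = one_hot("TATA")
scores = conv1d_scan("GCGTATAAGC", kernel)

# The position with the highest score is where the motif matches best.
best = max(range(len(scores)), key=scores.__getitem__)
print(best, scores[best])
```

The score at each position counts how many bases agree with the filter, so a perfect match yields a score equal to the filter length.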

Regulatory and ethical challenges in AI: Ensuring transparency, explainability, and accountability for trustworthy clinical diagnostics

The use of these algorithms raises a number of regulatory and ethical challenges concerning the sourcing and privacy of the data used to train them, the transparency of the underlying algorithms themselves, and the liability associated with prediction errors.

AI is often criticized for being a ‘black box’ system that produces an output without any explanation or justification. An ideal AI-based clinical diagnostic system should deliver accurate predictions and provide human-interpretable explanations of those predictions. This is crucial when an AI system makes a mistake that affects patient diagnosis and treatment, since without such explanations it may be challenging to establish accountability.

Therefore, ensuring that AI systems for variant interpretation are transparent and accountable is essential for building trust. This can be achieved through a variety of approaches, such as developing explainable AI techniques and providing users with access to the system's decision-making processes.

Explainable AI (XAI) techniques aim to make AI models more transparent and interpretable. They provide insights into how models make decisions, helping human users understand the reasoning behind those decisions. Common XAI techniques include feature importance, rule-based explanations, LIME, and SHAP. These techniques help identify influential features, generate human-readable rules, approximate complex models with simpler ones, and assign importance values to features.
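As a rough illustration of the first technique in that list, the sketch below computes permutation feature importance: each feature is shuffled in turn, and the resulting drop in accuracy indicates how much the model relies on it. Everything here is a toy assumption made for the example (the `toy_predict` rule, the feature names `allele_freq`, `conservation`, and `read_depth`, and the synthetic data); it is not how any specific variant interpreter works, and libraries such as LIME and SHAP use different, more elaborate methods.

```python
import random

def permutation_importance(predict, rows, labels, n_repeats=20, seed=0):
    """Return the mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = {}
    for name in rows[0]:
        drops = []
        for _ in range(n_repeats):
            column = [r[name] for r in rows]
            rng.shuffle(column)  # break the link between this feature and the label
            shuffled = [{**r, name: v} for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled))
        importances[name] = sum(drops) / n_repeats
    return importances

# Hypothetical toy classifier: calls a variant pathogenic when it is rare and conserved.
def toy_predict(row):
    return row["allele_freq"] < 0.01 and row["conservation"] > 0.5

# Synthetic data (assumption): labels come from the toy rule itself.
data_rng = random.Random(1)
rows = [
    {
        "allele_freq": data_rng.random() * 0.02,
        "conservation": data_rng.random(),
        "read_depth": data_rng.randint(10, 100),
    }
    for _ in range(200)
]
labels = [toy_predict(r) for r in rows]

scores = permutation_importance(toy_predict, rows, labels)
for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {score:.3f}")
```

Because the toy rule never looks at `read_depth`, shuffling that column leaves accuracy unchanged and its importance is zero, while the two features the rule depends on receive positive scores.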

Trustworthy AI

Trustworthy AI refers to the development and deployment of artificial intelligence systems that are reliable, transparent, ethical, and accountable. The idea behind trustworthy AI is to maximize its benefits while minimizing or preventing risks and dangers. It emphasizes that people and organizations can fully harness the potential of AI if they can trust its development and use. An AI system can only be trustworthy if it does not generate ethical problems, which implies that it does not discriminate unfairly, violate data protection or create unfair distribution of resources [5].

For this reason, it becomes necessary for policymakers to determine the desired level of intervention in the development of industries utilizing AI. At the same time, industries must consider the commercial implications of risk profiles. It is within the framework of this delicate interplay between policy and industry that the European Commission introduced the Assessment List for Trustworthy Artificial Intelligence (ALTAI) [6].

The ALTAI is a guideline, organized by the ethical principle under assessment, for evaluating whether an AI system adheres to the specified requirements.

There are seven key requirements that AI systems should meet in order to be considered trustworthy [7]:

1. Human oversight: AI systems should be designed to uphold and facilitate human decision-making, particularly in critical or sensitive situations, respecting human autonomy.

2. Technical robustness and safety: built-in fail-safes and mechanisms to prevent unintended harm.

3. Privacy and data governance: clear policies and practices in place for data collection, ensuring the safeguarding of privacy, data quality, integrity and accessibility.

4. Transparency: clear explanations of how AI systems make decisions and recommendations.

5. Diversity, non-discrimination and fairness: taking into account different perspectives and ensuring fairness in decision-making.

6. Environmental and societal well-being: promoting sustainability and social well-being.

7. Accountability: clear lines of responsibility and mechanisms for addressing errors or unintended consequences.

Achieving trustworthy AI requires collaboration between various stakeholders, including researchers, developers, policymakers, and users. It involves interdisciplinary approaches that consider technical, ethical, legal, and social aspects to ensure that AI technologies are developed and used in a responsible and beneficial manner.

Upcoming AI Act

The EU aims to regulate AI as part of its digital strategy, ensuring favorable conditions for its development and utilization, which can lead to numerous benefits in healthcare, transportation, manufacturing, and energy.

The European Commission proposed the first regulatory framework for AI in April 2021, under which AI systems are analyzed and classified according to their risk levels. Once approved, these regulations will mark a significant milestone as the world’s first set of rules specifically tailored for artificial intelligence. The goal of the AI Act is to create a legal framework by laying down obligations that are proportionate to the level of risk posed by AI systems [8].

On June 14, 2023, MEPs adopted the European Parliament's negotiating position on the AI Act, aiming to ensure that AI development and usage in Europe align with EU rights and values. These rules prioritize elements such as human oversight, safety, privacy, transparency, non-discrimination, and social and environmental well-being. The next step is to hold talks with EU member states in the Council to finalize the legislation, with the objective of reaching an agreement by the end of 2023.

The central element of the AI Act is a classification system that evaluates the potential risk of an AI technology to the health and safety or fundamental rights of individuals. This framework categorizes AI systems into four levels of risk: unacceptable, high, limited and minimal [8].

The key provisions that are expected to be included in the AI Act are:

1. Risk-Based Approach: the regulatory demands placed upon AI systems will be commensurate with the risks they present. This entails categorizing AI systems into various risk levels, taking into account factors like their potential for harm and the extent of human supervision needed.

2. Prohibition of Certain Uses: this covers applications such as social scoring, biometric identification, and the use of lethal autonomous weapons.

3. Transparency and Explainability: this entails granting access to the underlying data and algorithms, along with a clear and comprehensible explanation of the decision-making process.

4. Data Protection: the AI Act is expected to incorporate the principles of the EU's General Data Protection Regulation (GDPR), meaning that AI systems will need to comply with strict data protection rules.

The AI Act is expected to represent a major milestone in the regulation of AI systems in the EU, and could serve as a model for other jurisdictions around the world [8].

The role of AI in the future is a topic of much debate as technology continues to advance at a fast pace. While there are concerns about the potential negative impacts of AI, it is clear that it has the potential to transform many areas of people’s lives for the better. To ensure that this transformation is a positive one, it will be important to consider and address issues such as data privacy, informed consent and bias, along with the development and implementation of AI systems that are transparent, inclusive and accountable.

eVai: transparent and explainable variant interpretation in AI-driven Clinical Genomics

In the rapidly evolving landscape of clinical genomics and AI regulation, eVai, enGenome’s variant interpreter, exemplifies the practical application of two key principles of trustworthy AI: transparency and explainability.

As a tool designed to prioritize patient variants based on clinical and family history, eVai harnesses the power of AI, leveraging its award-winning Suggested Diagnosis feature, to propose the most probable diagnosis for the patient. A crucial aspect of eVai's approach is its integration of explainable AI (XAI) techniques, ensuring that the variant interpretation process is clear and interpretable. By providing transparent insights into the reasoning behind its predictions, eVai fosters trust among users, including clinicians and researchers.

eVai's transparent methodology ensures that its decisions can be easily understood and justified, thereby mitigating the "black box" perception of AI. In this manner, eVai ensures that its outcomes align with the highest ethical standards and adhere to the evolving AI regulations, such as the AI Act and the ALTAI guidelines.

To further safeguard patient data and privacy, eVai is designed with rigorous data protection measures in compliance with the EU's General Data Protection Regulation (GDPR).

enGenome’s variant interpreter represents a pioneering example of the application of transparency and explainability principles in the context of AI-driven clinical genomics.

Written by Floriana Basile